MobiSys'21 | nn-Meter: Towards Accurate Latency Prediction of Deep-Learning Model Inference on Diverse Edge Devices

Introduction

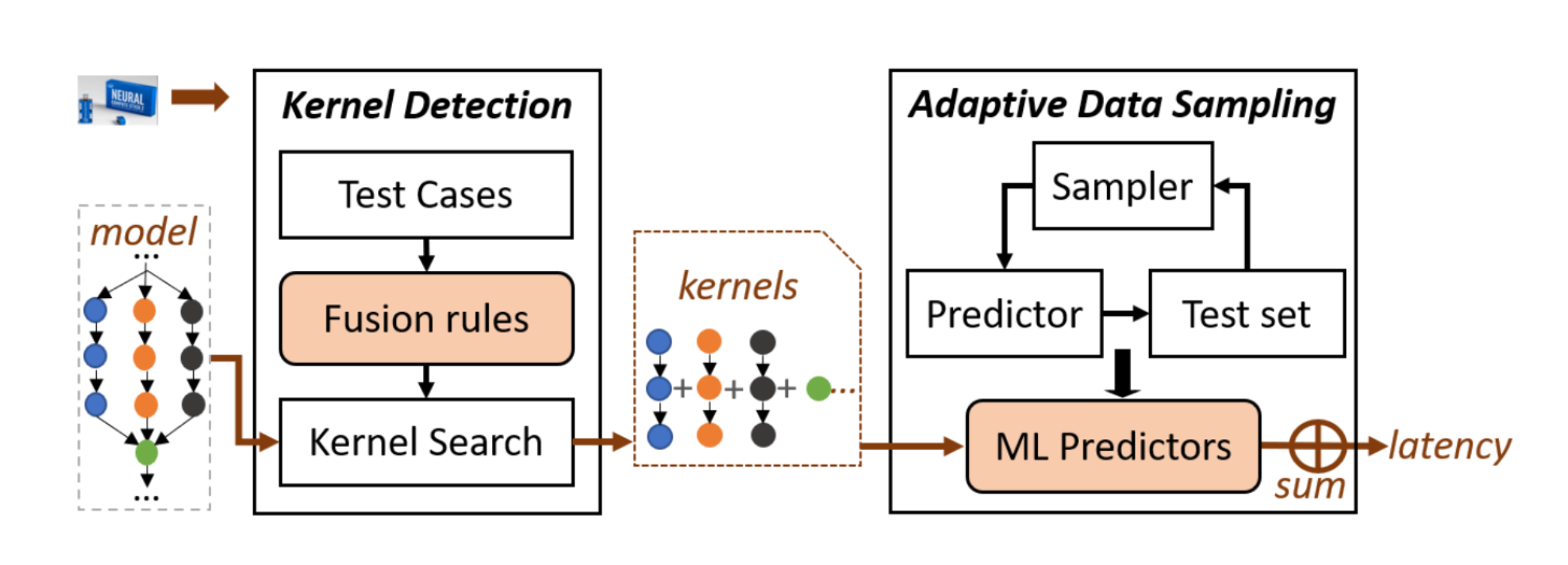

This paper won the Best Paper Award at MobiSys 2021. The paper proposes nn-Meter, a model inference time prediction system that can efficiently and accurately predict the inference latency of DNN models on different edge devices. The key idea is to divide the entire model into kernels (execution units on the device), and then perform kernel-level prediction.

Background

As more and more deep neural networks emerge, systems for predicting network inference performance need to be generalizable to adapt.

- If we want to perform model-level prediction, the search space is too large.

- If we use operator-level prediction, we cannot capture graph-level optimizations.

Deep learning inference frameworks typically undergo graph-level optimizations (such as operator fusion), which may or may not be backend-specific. To address this graph-level performance optimization, nn-Meter proposes a kernel-level prediction method to detect different fusion rules for different backends.

Motivation

- Kernels are the basic scheduling and execution units.

- In modern deep learning, the types of basic operators and kernels are quite limited. Kernel-level prediction can be general enough to support future new network architectures.

Challenges

- How to partition a model into different kernels on different devices. Since many devices have runtime optimizations, the kernels on different devices are different.

- How to predict the performance (latency) of a kernel. Kernels have high-dimensional configurable parameters, the prediction search space is large, and kernel optimizations vary across different devices.

Method Design

nn-Meter performs kernel-level prediction based on kernel detection and adaptive data sampling. The output of backend-agnostic optimizations from open-source frameworks (e.g., Tensorflow) serves as input to the prediction process.

Basic Assumptions

Kernels run sequentially. Edge devices don’t have many resources to run multiple kernels in parallel, and running multiple kernels concurrently doesn’t provide much benefit. Therefore, the predicted time can be viewed as the sum of the kernel’s expected running times.

Evaluation Dataset

Build a dataset suitable for kernel detection and NAS with a wide range of predictions. Twelve SOTA CNN models were collected on ImageNet2012. More than 2,000 variants were generated for each model based on output channel size and kernel size. Additionally, researchers selected 2,000 models with the highest test accuracy on CIFAR10 from NASBench201, each with a different set of connection structures.

Kernel Detection

Used to partition the target model into a set of kernels.

Detection Challenges

- Many edge platform backend inferences are closed-source

- CNN models are arbitrary

Fusion Principles

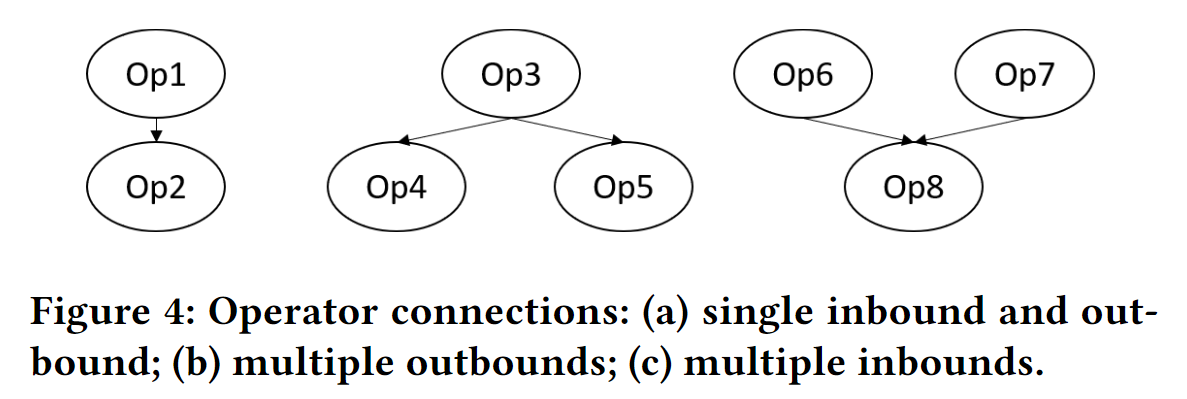

Both the type of operators and the connection method of operators affect kernel fusion.

Type: Operators like activation functions are easily fused.

Connection method: As shown in Figure 4:

- For type (a) operators, fusion is easy

- For type (b) operators, if operators 3, 4, and 5 are fused, that’s naturally best. If only operators 3 and 4 are fused, both the outputs of operators 3 and 4 need to be preserved.

- For type (c) operators, if only operators 6 and 8 are fused, it won’t bring extra overhead.

Need to ensure fusion doesn’t create circular dependencies.

Test cases

Test cases need to cover operator types and connections to detect fusion rules for different backends. For single-input single-output cases, if the following inequality is satisfied, operators 1 and 2 are fused. Where $\alpha$ is a parameter, set to 0.5 in experiments.

$$ T_{O p 1}+T_{O p 2}-T_{(O p 1, O p 2)}>\alpha * \min \left(T_{O p 1}, T_{O p 2}\right) $$

For multi-input multi-output models, choose the combination closest to the actual running time among $T_{O p 6}+T_{O p 7}+T_{O p 8}$, $T_{O p 6 \# O p 8}+T_{O p 7}$, $T_{O p 6}+T_{O p 7 \# O p 8}$, then use it as the fusion rule.

These detected fusion rules are recorded in JSON files. These records can be easily read by other algorithms or updated with new records.

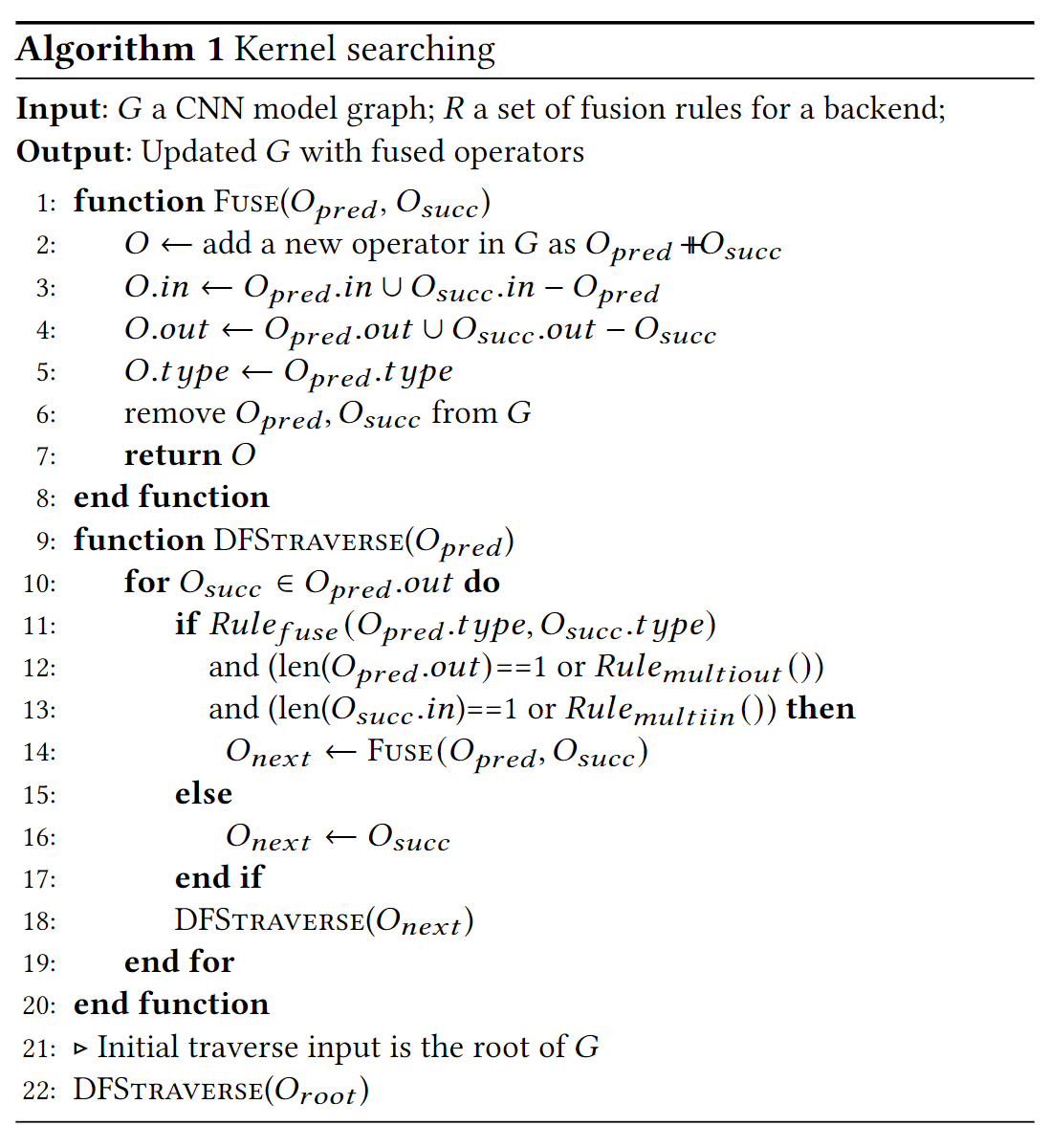

Fusion Algorithm

A DFS algorithm is used to search for operators that can be fused.

The original paper has more specific examples demonstrating this algorithm.

Adaptive Data Sampling

According to the authors’ experiments, latency doesn’t change linearly with certain parameters (output channels), but rather shows “steps”. Therefore, this paper proposes an adaptive data sampling algorithm to collect more data near these “step points”.

Results

Compared to linear regression using FLOPs and FLOPs + memory access cost (MAC) data and BRP-NAS, the method proposed in this paper shows significant improvements.

nn-Meter performs very well on CPUs and GPUs. However, the performance on VPUs is slightly worse, especially for models with either very high or very low inference latency. Reasons: Models with low inference latency are very sensitive to kernel prediction errors, and VPUs have special optimizations for some large-configuration operators.

Microbenchmarks show that both kernel-level prediction and adaptive sampling are very effective.

When using a predictor trained on Adreno 640 GPU to perform inference on Adreno 630 GPU, models that are not convolution-dominated achieve good accuracy. However, for convolution-dominated models, the predictor’s cross-device generalization ability is poor.

Discussion

- Only supports CNN models, not convolutional models.

- The backend is still a black box; for backend changes, it’s necessary to rebuild and retrain the predictor.

- The predictor is offline; can it be made online?

- How to redesign for multiple kernels executing simultaneously?

- Power prediction?