RTSS'17 | GPU Scheduling on the NVIDIA TX2: Hidden Details Revealed

- CUDA Programming Fundamentals

- GPU Architecture

Abstract

The paper designs a series of experiments to uncover the rules that govern kernel-level scheduling on the GPU.

Motivation

Today, GPUs play an important role in autonomous driving. Realizing full autonomy in mass-production vehicles will require stringent certification processes. Currently, GPUs tend to be closed-source “black boxes” whose features are not publicly disclosed. For certification to be tenable, such features must be documented.

One key aspect of certification is the validation of real-time constraints. Embedded systems tend to be less capable than desktop systems, so it is crucial to use the available GPU processing capacity fully. However, avoiding wasted GPU processing cycles is difficult without detailed knowledge of how a GPU schedules its work.

It is therefore worthwhile to work out the scheduling details of the GPU.

Method

This paper presents an in-depth study of GPU scheduling on an exemplar of current GPUs targeted toward autonomous systems. This study was conducted by using only black-box experimentation and publicly available documentation.

Experiment Environment

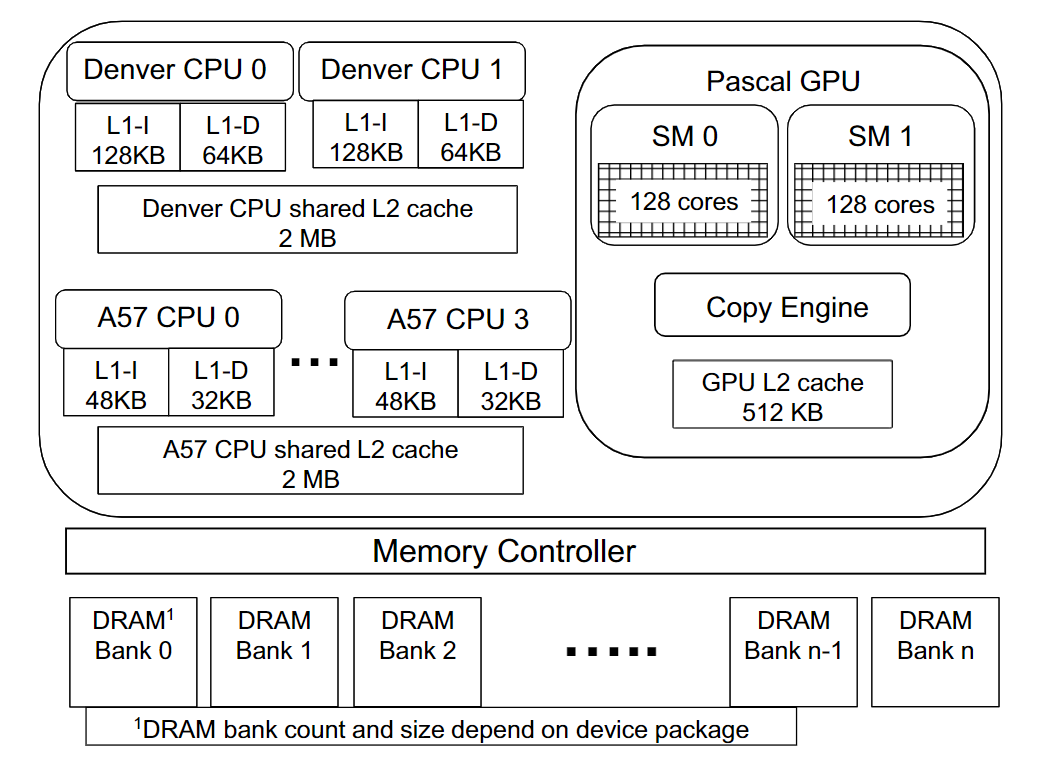

The paper uses the NVIDIA TX2 as its hardware platform. The SoC design of the TX2 is shown in the figure above.

Rules Observation

This section presents the paper's GPU scheduling rules for the TX2, assuming the GPU is accessed only by CPU tasks that share an address space.

The paper hypothesizes that several queues are used: one FIFO EE (execution engine) queue per address space, one FIFO CE (copy engine) queue that orders copy operations for assignment to the GPU’s CE, and one FIFO queue per CUDA stream (including the NULL stream, which is considered later).
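The hypothesized queue layout can be pictured with a small Python sketch. This is only a model for reasoning about the rules, not the GPU's actual implementation; the class name `GpuQueues`, its fields, and the stream labels are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class GpuQueues:
    """Hypothesized queues for one address space (names are illustrative)."""
    ee_queue: deque = field(default_factory=deque)      # FIFO of kernels awaiting block assignment
    ce_queue: deque = field(default_factory=deque)      # FIFO of copies awaiting the copy engine
    stream_queues: dict = field(default_factory=dict)   # stream id -> FIFO of operations

    def stream(self, stream_id):
        # Create the per-stream FIFO on first use; "NULL" stands for the NULL stream.
        return self.stream_queues.setdefault(stream_id, deque())


queues = GpuQueues()
queues.stream("NULL")                # the NULL stream has its own queue
queues.stream("S1").append("K1")     # operations keep their issue order within a stream
queues.stream("S1").append("K2")
print(list(queues.stream("S1")))
```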

General scheduling rules

- A copy operation or kernel is enqueued on the stream queue for its stream when the associated CUDA API function (memory transfer or kernel launch) is invoked.

- A kernel is enqueued on the EE queue when it reaches the head of its stream queue.

- A kernel at the head of the EE queue is dequeued from that queue once it becomes fully dispatched.

- A kernel is dequeued from its stream queue once all of its blocks complete execution.
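The four general rules above can be traced with a small simulation of a single stream. This is a sketch under simplifying assumptions: resource limits are ignored, and the names `Kernel`, `launch`, `promote`, `dispatch_block`, and `complete_block` are hypothetical helpers, not CUDA APIs.

```python
from collections import deque


class Kernel:
    def __init__(self, name, num_blocks):
        self.name = name
        self.unassigned = num_blocks   # blocks not yet dispatched to an SM
        self.running = 0               # blocks dispatched but not yet finished
        self.promoted = False          # True once placed on the EE queue


def launch(stream_queue, kernel):
    # The kernel joins its stream queue when the launch call is made.
    stream_queue.append(kernel)


def promote(stream_queue, ee_queue):
    # The kernel at the head of the stream queue joins the EE queue (once).
    if stream_queue and not stream_queue[0].promoted:
        stream_queue[0].promoted = True
        ee_queue.append(stream_queue[0])


def dispatch_block(ee_queue):
    # Assign one block of the kernel at the head of the EE queue.
    head = ee_queue[0]
    head.unassigned -= 1
    head.running += 1
    if head.unassigned == 0:
        ee_queue.popleft()             # fully dispatched: leaves the EE queue


def complete_block(stream_queue, kernel):
    kernel.running -= 1
    if kernel.unassigned == 0 and kernel.running == 0:
        assert stream_queue[0] is kernel
        stream_queue.popleft()         # all blocks done: leaves its stream queue


stream, ee = deque(), deque()
k1, k2 = Kernel("K1", 2), Kernel("K2", 1)
launch(stream, k1)
launch(stream, k2)
promote(stream, ee)                    # K1 enters the EE queue; K2 waits in the stream
dispatch_block(ee)
dispatch_block(ee)                     # K1 is fully dispatched and leaves the EE queue
promote(stream, ee)                    # no effect: K1 still heads the stream queue
ee_while_k1_runs = [k.name for k in ee]
complete_block(stream, k1)
complete_block(stream, k1)             # K1 leaves the stream queue
promote(stream, ee)                    # now K2 can enter the EE queue
print(ee_while_k1_runs, [k.name for k in ee])
```

The trace shows why two kernels in the same stream never overlap in this model: K2 cannot reach the EE queue until K1 has left the stream queue.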

Non-preemptive execution

- Only blocks of the kernel at the head of the EE queue are eligible to be assigned. (This applies among kernels of the same priority.)

Rules governing thread and shared-memory resources

- A block of the kernel at the head of the EE queue is eligible to be assigned only if its resource constraints are met.

- A block of the kernel at the head of the EE queue is eligible to be assigned only if there are sufficient thread resources available on some SM.

- A block of the kernel at the head of the EE queue is eligible to be assigned only if there are sufficient shared-memory resources available on some SM.
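The thread and shared-memory checks can be sketched as a simple eligibility test. The per-SM limits used here (2048 threads and 64 KB of shared memory on each of two SMs) are assumptions about the TX2, and picking the first SM that fits is also an assumption: the rules only require that some SM has enough resources.

```python
from dataclasses import dataclass


@dataclass
class SM:
    free_threads: int = 2048            # assumed per-SM thread limit
    free_shared_mem: int = 64 * 1024    # assumed per-SM shared memory, in bytes


def eligible_sm(sms, threads_per_block, shared_mem_per_block):
    """Return the index of the first SM that can hold one block, or None."""
    for i, sm in enumerate(sms):
        if (sm.free_threads >= threads_per_block
                and sm.free_shared_mem >= shared_mem_per_block):
            return i
    return None


def assign_block(sms, threads_per_block, shared_mem_per_block):
    """Assign one block of the EE-queue head kernel if some SM has room."""
    i = eligible_sm(sms, threads_per_block, shared_mem_per_block)
    if i is not None:
        sms[i].free_threads -= threads_per_block
        sms[i].free_shared_mem -= shared_mem_per_block
    return i


# Thread-limited case: 1024-thread blocks, no shared memory.
sms = [SM(), SM()]
thread_limited = [assign_block(sms, 1024, 0) for _ in range(5)]

# Shared-memory-limited case: 256-thread blocks using 48 KB each.
sms = [SM(), SM()]
shared_limited = [assign_block(sms, 256, 48 * 1024) for _ in range(3)]

print(thread_limited)   # [0, 0, 1, 1, None]
print(shared_limited)   # [0, 1, None]
```

In both cases the last block must wait until a running block finishes and releases its resources, even though the other resource is still plentiful.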

Copy operations

- A copy operation is enqueued on the CE queue when it reaches the head of its stream queue.

- A copy operation at the head of the CE queue is eligible to be assigned to the CE.

- A copy operation at the head of the CE queue is dequeued from the CE queue once the copy is assigned to the CE on the GPU.
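The copy rules mirror the kernel rules, with the CE queue in place of the EE queue. The sketch below assumes the CE handles one copy at a time; `CopyEngineModel` and its methods are hypothetical names used only for illustration.

```python
from collections import deque


class CopyEngineModel:
    def __init__(self):
        self.ce_queue = deque()
        self.active = None   # copy currently on the CE (assumption: one at a time)

    def enqueue(self, copy_name):
        # Called when the copy reaches the head of its stream queue.
        self.ce_queue.append(copy_name)

    def try_assign(self):
        # The head of the CE queue is eligible; if the CE is idle, assign it
        # and dequeue it from the CE queue at the moment of assignment.
        if self.active is None and self.ce_queue:
            self.active = self.ce_queue.popleft()
        return self.active

    def finish(self):
        # The CE completes the active copy and becomes idle again.
        done, self.active = self.active, None
        return done


ce = CopyEngineModel()
ce.enqueue("C1")
ce.enqueue("C2")
first = ce.try_assign()       # C1 is assigned and leaves the CE queue
still_first = ce.try_assign() # CE is busy, so C2 stays queued
ce.finish()
second = ce.try_assign()      # C2 is assigned once the CE is idle
print(first, still_first, second)
```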

The NULL Stream

Available documentation makes clear that two kernels cannot run concurrently if, between their issuances, any operations are submitted to the NULL stream.

- A kernel Kk at the head of the NULL stream queue is enqueued on the EE queue when, for each other stream queue, either that queue is empty, or the kernel at its head was launched after Kk.

- A kernel Kk at the head of a non-NULL stream queue cannot be enqueued on the EE queue unless the NULL stream queue is either empty or the kernel at its head was launched after Kk.
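The two NULL-stream rules can be written as a single predicate over launch order. In this sketch each kernel is represented by its launch sequence number (a smaller number means an earlier launch), the stream queues are assumed to hold only kernels, and `may_enter_ee` is a hypothetical helper name.

```python
from collections import deque

NULL = "NULL"


def may_enter_ee(stream_id, stream_queues):
    """Check whether the kernel at the head of stream_id may join the EE queue.

    stream_queues maps a stream id to a deque of launch sequence numbers.
    """
    k = stream_queues[stream_id][0]
    if stream_id == NULL:
        # Every other stream queue must be empty or headed by a later launch.
        return all(not q or q[0] > k
                   for sid, q in stream_queues.items() if sid != NULL)
    # The NULL stream queue must be empty or headed by a later launch.
    null_q = stream_queues.get(NULL)
    return not null_q or null_q[0] > k


# Launch order: K1 on S1, then K2 on the NULL stream, then K3 on S2.
qs = {"S1": deque([1]), NULL: deque([2]), "S2": deque([3])}
before = (may_enter_ee("S1", qs), may_enter_ee(NULL, qs), may_enter_ee("S2", qs))

qs["S1"].popleft()            # K1 completes and leaves its stream queue
after_k1 = (may_enter_ee(NULL, qs), may_enter_ee("S2", qs))

qs[NULL].popleft()            # K2 completes and leaves the NULL stream queue
after_k2 = may_enter_ee("S2", qs)

print(before, after_k1, after_k2)
```

The NULL-stream kernel K2 acts as a barrier here: K3 was launched on a separate stream, yet it cannot start until K2 has finished, and K2 cannot start until K1 has finished.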

Stream Priorities

Now consider how the use of prioritized streams affects scheduling.

The paper hypothesizes that the TX2’s GPU scheduler includes one additional EE queue for priority-high kernels.

- A kernel can only be enqueued on the EE queue matching the priority of its stream.

- A block of a kernel at the head of any EE queue is eligible to be assigned only if all higher-priority EE queues (priority-high over priority-low) are empty.
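A minimal sketch of the two-level priority scheme follows. The labels `"high"` and `"low"` and the helper names are illustrative assumptions; the resource checks from the earlier rules would still apply to whichever kernel is selected.

```python
from collections import deque

PRIORITY_ORDER = ["high", "low"]                 # highest priority first
ee_queues = {level: deque() for level in PRIORITY_ORDER}


def enqueue_kernel(ee_queues, kernel, stream_priority):
    # A kernel may only join the EE queue matching its stream's priority.
    ee_queues[stream_priority].append(kernel)


def next_eligible_kernel(ee_queues):
    # Blocks of a queue's head kernel are eligible only if every
    # higher-priority EE queue is empty, so scan from the top down.
    for level in PRIORITY_ORDER:
        if ee_queues[level]:
            return ee_queues[level][0]
    return None


enqueue_kernel(ee_queues, "K1", "low")
only_low = next_eligible_kernel(ee_queues)       # K1: nothing outranks it
enqueue_kernel(ee_queues, "K2", "high")
with_high = next_eligible_kernel(ee_queues)      # K2 now blocks K1's remaining blocks
ee_queues["high"].popleft()                      # K2 becomes fully dispatched
after_high = next_eligible_kernel(ee_queues)     # K1 is eligible again
print(only_low, with_high, after_high)
```

Note that under this model a high-priority kernel whose blocks do not currently fit on any SM still prevents low-priority blocks from being assigned, because its EE queue is not empty.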