OSDI'18 | Gandiva: Introspective Cluster Scheduling for Deep Learning

Abstract

This paper introduces Gandiva, a new cluster scheduling framework that uses domain-specific knowledge to improve the latency and efficiency of training deep learning models in a GPU cluster. To improve cluster efficiency, Gandiva proposes three mechanisms that exploit the characteristics of deep learning training jobs: (1) suspend-resume/packing; (2) migration; (3) grow-shrink. Gandiva first places jobs greedily in reactive mode, then uses introspective mode to keep refining those placements and improve cluster utilization.

Background & Motivation

Deep learning (DL) is feedback-driven exploration. DL jobs are compute-intensive, and GPUs are the most popular hardware for DL today.

A traditional scheduler that treats a job as a black box and gives it exclusive use of a GPU will therefore achieve sub-optimal cluster efficiency. In addition, a job scheduled exclusively on a GPU holds it until completion, which can lead to high latency for queued jobs (head-of-line blocking). However, DL jobs have several unique characteristics that a scheduler can exploit to make better decisions. These characteristics are listed below:

- Deep Learning Training (DLT) jobs are feedback-driven exploration. Users typically try several configurations of a job (a multi-job) and use early feedback from these jobs to decide whether to prioritize or kill some subset of them.

- Jobs widely differ in terms of memory usage, GPU core utilization, sensitivity to interconnect bandwidth, and/or interference from other jobs. In other words, some jobs might be sensitive to the intra-server locality (same PCIe Switch, same Socket and different Socket) and/or inter-server locality and/or might interfere with each other, while some jobs might not be affected.

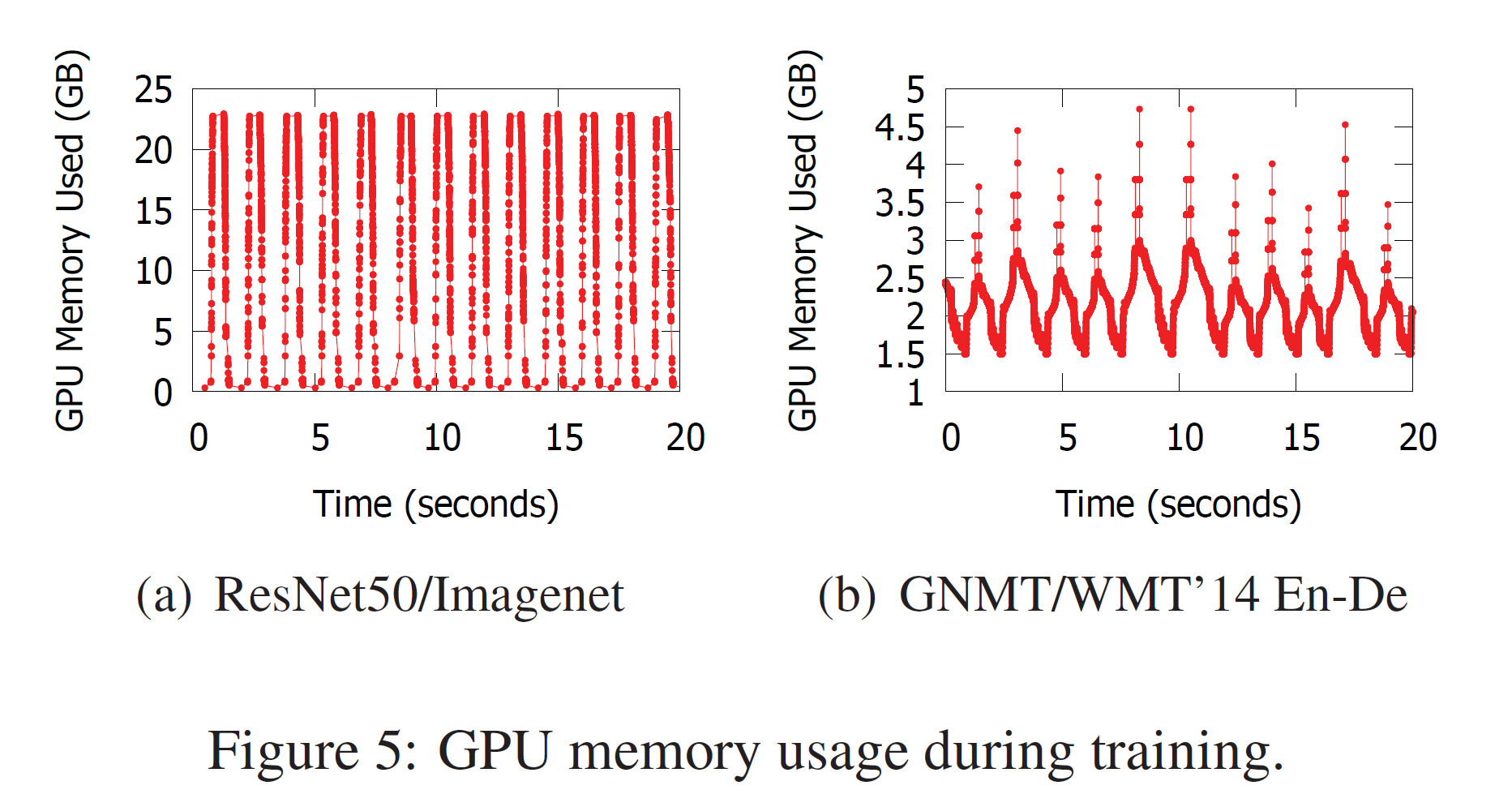

- A DLT job comprises millions of similar, clearly separated mini-batch iterations, which gives it cyclic predictability. For example, GPU memory usage clearly follows a cyclic pattern, as the examples in Figure 5 show.

Contributions

- The paper illustrates various unique characteristics of the deep learning workflow and maps them to specific requirements for cluster scheduling.

- The paper identifies generic primitives that can be used by a DLT job scheduling policy, and makes primitives such as time-slicing and migration an order of magnitude more efficient and practical by leveraging DL-specific knowledge of intra-job periodicity.

- The paper proposes and evaluates a new introspective scheduling framework that uses domain-specific knowledge of DLT jobs to refine its scheduling decisions continuously, thereby significantly improving early feedback time and delivering high cluster efficiency.

Approaches

Mechanisms

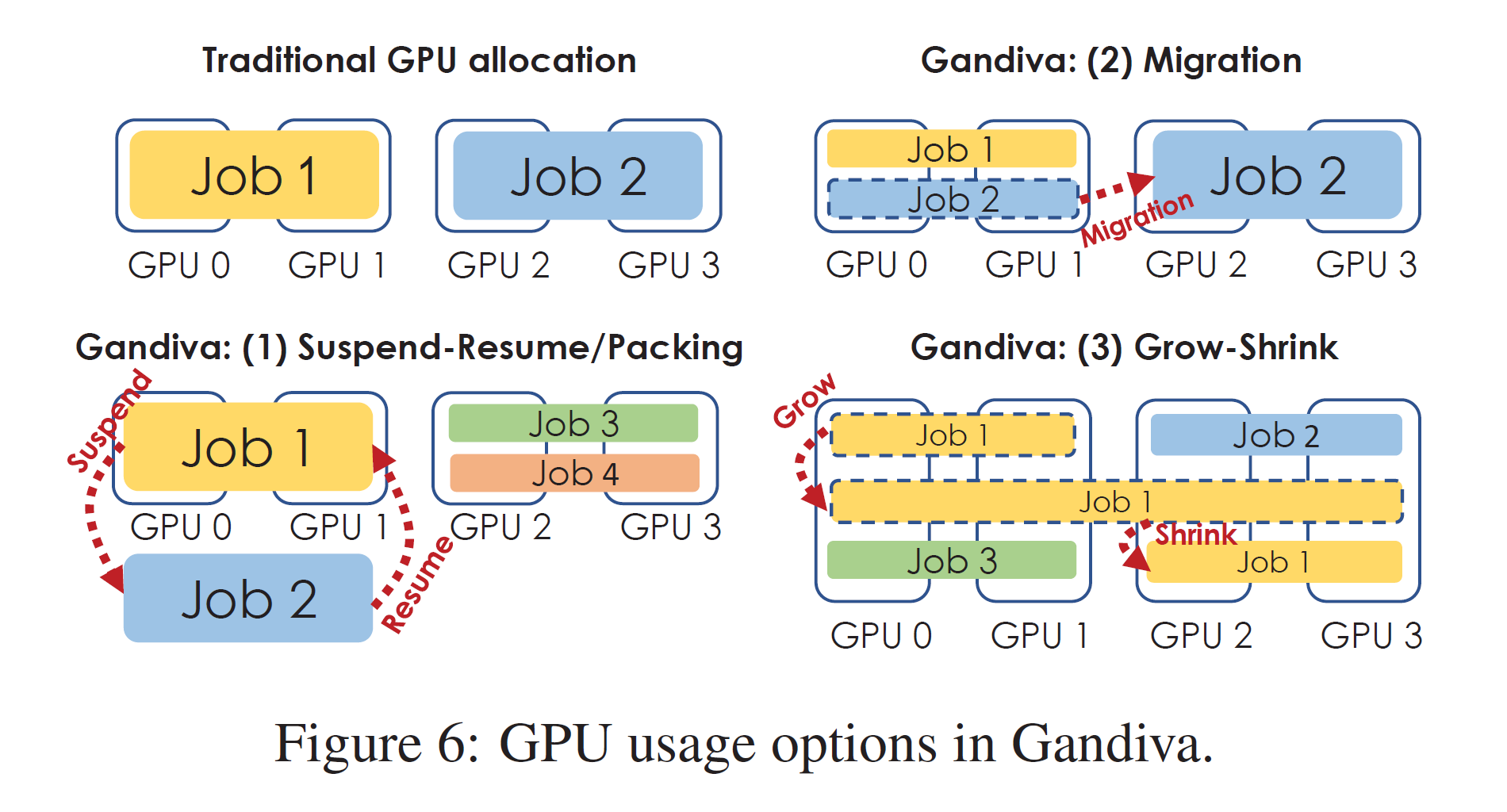

The mechanisms are shown in Figure 6.

- Suspend-Resume. Gandiva exploits the cyclic behavior of DL and suspends and resumes DLT jobs when their GPU memory usage is at its lowest, which greatly reduces the cost of suspend-resume. Gandiva builds on suspend-resume to add custom support for GPU time-slicing, similar to time-slicing in a traditional OS. Time-slicing avoids head-of-line blocking.

- Packing. An alternative to suspend-resume for time-slicing is to run multiple DLT jobs on a GPU simultaneously and let the GPU time-share the jobs. We call this packing.

- Migration. Migration can improve the locality of DLT jobs.

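- Grow-Shrink. When GPUs are relatively free, Gandiva can grow a job to use more of them, which improves GPU utilization, and shrink it again when the GPUs are needed elsewhere.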

- Profiling. Like any scheduler, Gandiva monitors resource usage such as CPU and GPU utilization, CPU/GPU memory, etc. However, what is unique to Gandiva is that it also introspects DLT jobs in an application-aware manner to estimate their rate of progress. Gandiva estimates a DLT job's mini_batch_time, the time to do one forward/backward pass over a batch of input data, as the time taken between two minimums of the GPU memory usage cycles (Figure 5(a)). For example, when two DLT jobs are packed on one GPU as described earlier, Gandiva can compare the mini_batch_time of each job before and after packing to decide whether packing is effective.
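The profiling idea above can be illustrated with a minimal Python sketch. It assumes GPU memory usage is available as evenly or unevenly sampled `(timestamp, usage)` series, and it assumes that time-slicing `n` jobs gives each job roughly `1/n` of its stand-alone throughput. The function names and the comparison rule are hypothetical illustrations, not Gandiva's implementation.

```python
from typing import List, Sequence


def local_minima_times(timestamps: Sequence[float],
                       mem_usage: Sequence[float]) -> List[float]:
    """Return the timestamps at which memory usage hits a local minimum."""
    minima = []
    for i in range(1, len(mem_usage) - 1):
        # Strict on the left, non-strict on the right, so a flat valley
        # is counted once.
        if mem_usage[i] < mem_usage[i - 1] and mem_usage[i] <= mem_usage[i + 1]:
            minima.append(timestamps[i])
    return minima


def estimate_mini_batch_time(timestamps: Sequence[float],
                             mem_usage: Sequence[float]) -> float:
    """Average gap between consecutive memory-usage minima."""
    minima = local_minima_times(timestamps, mem_usage)
    if len(minima) < 2:
        raise ValueError("need at least two memory minima")
    gaps = [b - a for a, b in zip(minima, minima[1:])]
    return sum(gaps) / len(gaps)


def packing_is_effective(before: Sequence[float],
                         after: Sequence[float]) -> bool:
    """Compare packed throughput with an assumed time-sliced baseline.

    before[i] / after[i] are job i's mini_batch_time when running alone
    and when packed. Assumption: under time-slicing each of the n jobs
    gets 1/n of the GPU, so its throughput is 1 / (n * before[i]).
    """
    n = len(before)
    packed = sum(1.0 / t for t in after)
    time_sliced = sum(1.0 / (n * t) for t in before)
    return packed > time_sliced
```

For instance, two jobs that each slow down from 1.0 s to 1.5 s per mini-batch when packed still beat the assumed time-sliced baseline, while a slowdown to 2.5 s does not.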

Scheduling Policy

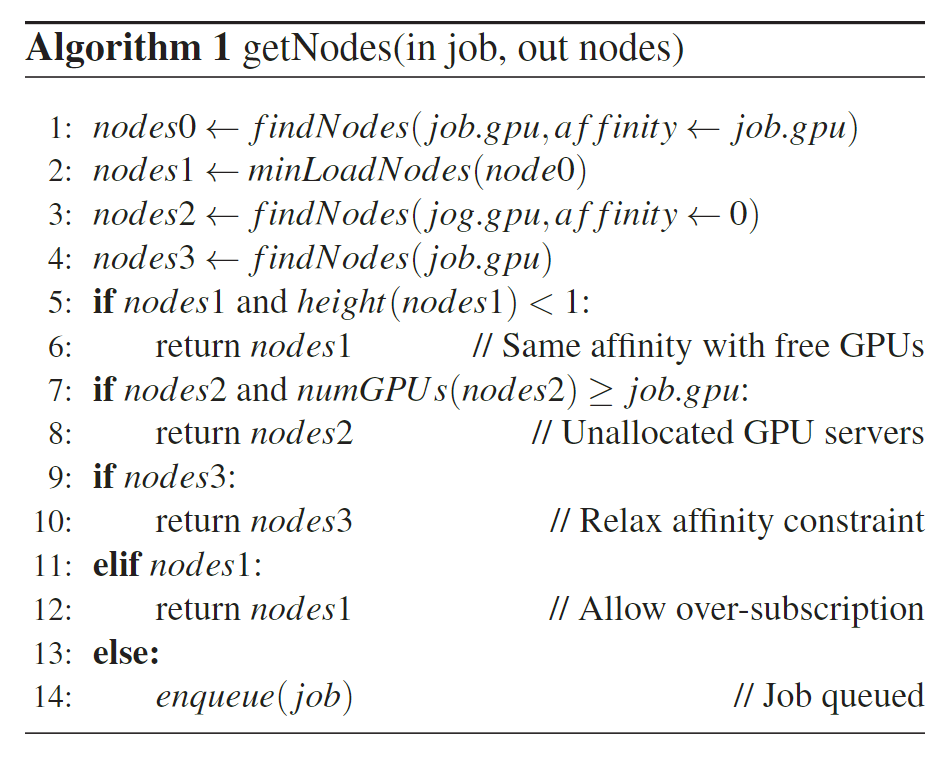

The height of a server is defined as $\lceil M/N \rceil$, where $M$ is the number of allocated GPUs and $N$ is the number of total GPUs. Thus, the suspend/resume mechanism will only be used when the height of a server exceeds one. The height of a cluster is defined as the maximum height of all its servers.

The affinity of a server is defined as the type of jobs (based on GPUs required) assigned to that server. For example, initially servers have affinity of zero and, if a job that requires two GPUs is assigned to a server, the affinity of that server is changed to two. This parameter is used by the scheduler to assign jobs with similar GPU requirements to the same server.
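The two definitions can be made concrete with a small Python sketch. The `Server` class and its field names are hypothetical; only the height formula and the affinity rule come from the definitions above.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Server:
    total_gpus: int          # N
    allocated_gpus: int = 0  # M, may exceed N under over-subscription
    affinity: int = 0        # 0 means no job type has been assigned yet

    def height(self) -> int:
        # Integer ceiling of M / N.
        return (self.allocated_gpus + self.total_gpus - 1) // self.total_gpus

    def assign(self, gpus_required: int) -> None:
        # The first job placed on an unassigned server sets its affinity.
        if self.affinity == 0:
            self.affinity = gpus_required
        self.allocated_gpus += gpus_required


def cluster_height(servers: Iterable[Server]) -> int:
    """Maximum height over all servers."""
    return max(s.height() for s in servers)
```

A 4-GPU server with six allocated GPUs has height 2, which is the point at which suspend-resume is needed to share the GPUs.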

The primary design goal of the Gandiva scheduler is to provide early feedback to jobs. In prevalent schedulers, jobs wait in a queue during overload. In contrast, Gandiva supports over-subscription by allocating GPUs to a new job immediately and using the suspend-resume mechanism to provide early results.

A second design goal is cluster efficiency. This is achieved through a continuous optimization process that uses profiling and a greedy heuristic that takes advantage of mechanisms such as packing, migration, and grow-shrink.

To achieve these goals, the Gandiva scheduler operates in two modes: reactive and introspective. Note that the scheduler can be operating in both modes at the same time.

Reactive Mode

Reactive mode refers to the scheduler reacting to events such as job arrivals, departures, and machine failures. When a new job arrives, the scheduler allocates servers/GPUs for the job. The node allocation policy used in Gandiva is shown in Algorithm 1. Line 1 of the algorithm might result in the placement shown in Figure 7: jobs that require 1 GPU are placed together, but jobs that require 2 or 4 GPUs are placed on different servers.
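Algorithm 1 is not reproduced in these notes, so the following Python sketch only illustrates an affinity-first placement that is consistent with the description above. The order of the fallback tiers, the tie-breaking rule (least-loaded node), and all names are assumptions for illustration, not the paper's algorithm verbatim.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    total_gpus: int
    allocated_gpus: int = 0
    affinity: int = 0  # 0 means unassigned

    def free_gpus(self) -> int:
        return max(self.total_gpus - self.allocated_gpus, 0)


def pick_node(nodes: List[Node], gpus_required: int) -> Optional[Node]:
    """Try progressively more relaxed tiers (assumed ordering)."""
    tiers = [
        # 1. Same affinity with enough free GPUs.
        lambda n: n.affinity == gpus_required and n.free_gpus() >= gpus_required,
        # 2. Node with no affinity assigned yet.
        lambda n: n.affinity == 0 and n.free_gpus() >= gpus_required,
        # 3. Any node with enough free GPUs (relaxed affinity).
        lambda n: n.free_gpus() >= gpus_required,
        # 4. Same affinity, over-subscribed (relies on suspend-resume).
        lambda n: n.affinity == gpus_required and n.total_gpus >= gpus_required,
    ]
    for accepts in tiers:
        candidates = [n for n in nodes if accepts(n)]
        if candidates:
            # Tie-break: least-loaded candidate (an assumption).
            return min(candidates, key=lambda n: n.allocated_gpus)
    return None  # caller would queue the job


def place(nodes: List[Node], gpus_required: int) -> Optional[Node]:
    node = pick_node(nodes, gpus_required)
    if node is not None:
        if node.affinity == 0:
            node.affinity = gpus_required
        node.allocated_gpus += gpus_required
    return node
```

With two empty 4-GPU nodes, two 1-GPU jobs land on the same node and a 2-GPU job lands on the other, which mirrors the grouping by GPU requirement described for Figure 7.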

Introspective Mode

Introspective mode refers to a continuous process in which the scheduler aims to improve cluster utilization and job completion time.

Packing

Based on the profiling data, the scheduler maintains a list of jobs sorted by their GPU utilization. The scheduler greedily picks the job with the lowest GPU utilization and attempts to pack it on a GPU with the lowest GPU utilization.

Packing is deemed successful when the total throughput of the packed jobs is greater than with time-slicing. If packing is unsuccessful, Gandiva undoes the packing and tries the GPU with the next-lowest utilization. If packing is successful, Gandiva finds the job with the next-lowest utilization and repeats this process.
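The greedy loop described above might look roughly like the following Python sketch. The `packing_helps` callback stands in for the profiling comparison (packed throughput versus time-slicing) and is a hypothetical hook, as are the dictionary-based inputs.

```python
from typing import Callable, Dict, List, Tuple


def greedy_pack(job_util: Dict[str, float],
                gpu_util: Dict[str, float],
                packing_helps: Callable[[str, str], bool]
                ) -> List[Tuple[str, str]]:
    """Return (job, gpu) pairs chosen by the greedy packing heuristic."""
    gpu_util = dict(gpu_util)  # work on a copy; do not mutate the caller's data
    placements = []
    # Visit jobs from lowest to highest GPU utilization.
    for job in sorted(job_util, key=job_util.get):
        # Try GPUs from lowest to highest utilization.
        for gpu in sorted(gpu_util, key=gpu_util.get):
            if packing_helps(job, gpu):
                placements.append((job, gpu))
                gpu_util[gpu] += job_util[job]
                break
            # Otherwise the trial packing is undone and the next GPU is tried.
    return placements
```

Re-sorting the GPUs for every job keeps the "lowest utilization first" rule valid after each successful packing changes a GPU's load.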

Migration

To improve locality, Gandiva picks jobs that are not co-located and tries to find a new co-located placement.

Additionally, for de-fragmentation, Gandiva picks the server with the most free GPUs among all non-idle ones and tries to move the jobs running on that server to other servers. A job is migrated to another server with fewer free GPUs, as long as the performance loss is negligible. Gandiva repeats this until the number of free GPUs on every non-idle server is less than a threshold (3 out of 4 in the paper's experiments) or until no job would benefit from migration.
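A minimal Python sketch of the de-fragmentation loop follows. It assumes every server has the same number of GPUs, represents each job only by its GPU count, and omits the performance-loss check (every candidate move is assumed to have negligible loss). The function and parameter names are hypothetical.

```python
from typing import Dict, List


def defragment(jobs: Dict[str, List[int]],
               gpus_per_server: int,
               threshold: int) -> Dict[str, List[int]]:
    """jobs maps server name -> GPU counts of the jobs running on it."""
    jobs = {server: list(js) for server, js in jobs.items()}

    def free(server: str) -> int:
        return gpus_per_server - sum(jobs[server])

    while True:
        non_idle = [s for s in jobs if jobs[s]]
        # Stop once every non-idle server has fewer free GPUs than the threshold.
        if all(free(s) < threshold for s in non_idle):
            break
        src = max(non_idle, key=free)  # non-idle server with the most free GPUs
        moved = False
        for need in list(jobs[src]):
            # Only move to a server with fewer free GPUs that still fits the job.
            targets = [s for s in non_idle
                       if s != src and need <= free(s) < free(src)]
            if targets:
                dst = min(targets, key=free)
                jobs[src].remove(need)
                jobs[dst].append(need)
                moved = True
        if not moved:  # no job benefits from migration
            break
    return jobs
```

Because a job only ever moves to a fuller server, each move concentrates load and the loop terminates.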

Grow-shrink

Gandiva only grows jobs to use up to the maximum number of GPUs available in a single server. Further, Gandiva triggers growth only after an idle period, to avoid thrashing, and shrinks immediately when a new job might require the GPUs.

Time-slicing

Gandiva supports round-robin scheduling in each server to time-share GPUs fairly. When jobs have multiple priority levels, higher-priority jobs will never be suspended to accommodate lower-priority jobs. If a server is fully utilized with higher-priority jobs, the lower-priority job will be migrated to another server, if feasible.
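As a rough illustration of priority-aware round robin on one server, the Python sketch below assumes every job needs exactly one GPU and that a larger number means higher priority. It only models which jobs run in each time quantum; migrating starved low-priority jobs to another server is not modeled. All names are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


def time_slice(jobs: Dict[str, int], gpus: int, quanta: int) -> List[List[str]]:
    """Return the jobs running in each quantum on a server with `gpus` GPUs."""
    # One round-robin queue per priority level.
    levels: Dict[int, Deque[str]] = {}
    for name, priority in jobs.items():
        levels.setdefault(priority, deque()).append(name)

    schedule = []
    for _ in range(quanta):
        slots = gpus
        running: List[str] = []
        # Fill GPUs from the highest priority level downwards.
        for priority in sorted(levels, reverse=True):
            queue = levels[priority]
            take = min(slots, len(queue))
            running.extend(list(queue)[:take])
            if take < len(queue):
                # Not everyone at this level fits: rotate for fairness.
                queue.rotate(-take)
            slots -= take
            if slots == 0:
                break
        schedule.append(running)
    return schedule
```

A level is rotated only when it cannot be served in full, so a high-priority job that fits is never suspended in favor of a lower-priority one.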

Implementation

DLT jobs are encapsulated as Docker containers containing customized versions of DL toolkits and a Gandiva client. These jobs are submitted to a Kubernetes system. Gandiva also implements a custom scheduler that then schedules these jobs.

Evaluation

Gandiva achieves higher throughput and cluster utilization on DLT jobs. It can also explore more configurations in parallel, which greatly speeds up AutoML.