OSDI'20 | AntMan: Dynamic Scaling on GPU Clusters for Deep Learning

Abstract

This paper introduces AntMan, a system that accommodates the fluctuating resource demands of deep learning training jobs. AntMan uses spare GPU resources to co-execute multiple jobs on a shared GPU. It exploits unique characteristics of deep learning training to introduce dynamic scaling mechanisms for memory and computation within the deep learning frameworks. This allows fine-grained coordination between jobs and prevents job interference. Evaluations show that AntMan improves overall utilization in a multi-tenant cluster without compromising fairness.

Background & Motivation

Production DL training jobs cannot fully utilize all the GPU resources throughout their execution. In distributed training, a large part of the time is spent on network communication. In addition, multi-GPU training jobs require gang scheduling, which means a job does not start training until all required GPUs are simultaneously available. GPUs that sit idle while waiting for the rest of the gang waste many GPU cycles.

Packing jobs on shared GPUs can boost GPU utilization and let the same cluster complete more jobs overall. However, this approach is rarely used in production clusters. Although improving GPU utilization is beneficial, it is also critical to guarantee the performance of important resource-guarantee jobs (i.e., jobs with a resource quota). Job packing can introduce memory contention among concurrent jobs, which interferes with resource-guarantee jobs or even makes them fail.

Hence, AntMan is proposed to pack jobs using two new mechanisms: dynamic scaling in DL frameworks and a collaborative scheduler. While guaranteeing the performance of resource-guarantee jobs, AntMan dispatches opportunistic jobs that use spare GPU resources on a best-effort basis, at low priority and without any resource guarantee.

Design

The design of AntMan includes three parts.

- Dynamic Scaling in DL Frameworks can make the jobs execute at their minimal requirements to prevent GPU memory usage outbreak failures and adapt to the fluctuating computation unit usage to limit potential interference.
- Co-design the cluster scheduler and DL frameworks to leverage the dynamic scaling mechanisms for Collaborative Scheduling.
- Scheduling Policy is introduced to schedule the DLT jobs efficiently.

Dynamic Scaling in DL Frameworks

GPU memory and computation resources must be scaled dynamically so that resource-guarantee jobs always have enough resources and interference between jobs is reduced.

Memory Management

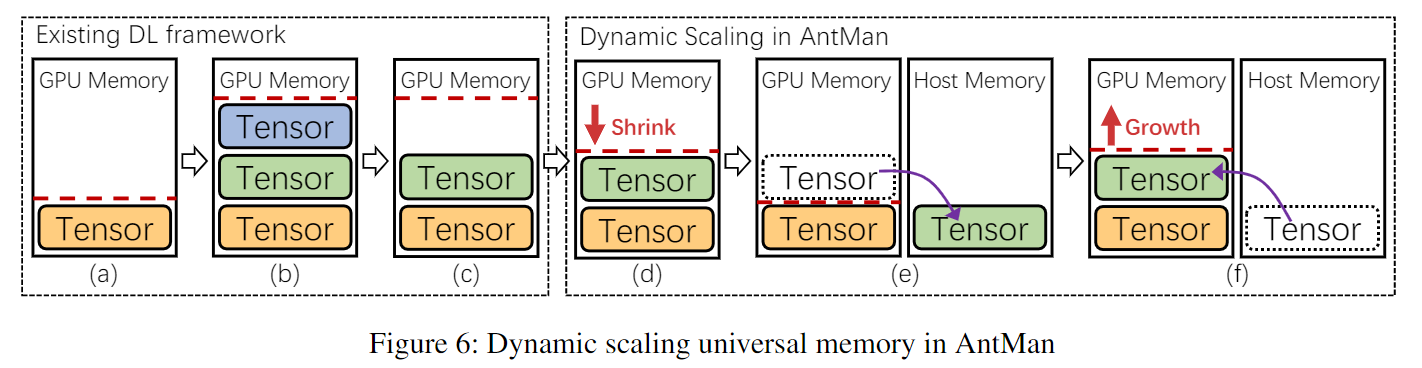

The difference in memory management between a conventional DL framework and AntMan is shown in Fig. 6. To eliminate the expensive overhead of memory allocation and de-allocation and to speed up training across mini-batches, conventional DL frameworks (e.g., PyTorch and TensorFlow) cache GPU memory in a global memory allocator after tensors are destroyed (Fig. 6a-6c).

AntMan instead scales the upper limit of GPU memory. It proactively detects the memory in use and shrinks the cached memory, adjusting GPU memory usage to an appropriate fit. This is done by monitoring application performance and memory requirements while processing mini-batches (Fig. 6d). In addition, tensors can be allocated in host memory if GPU memory is still insufficient (Fig. 6e) and are moved back to the GPU automatically when the GPU memory upper limit increases (Fig. 6f).

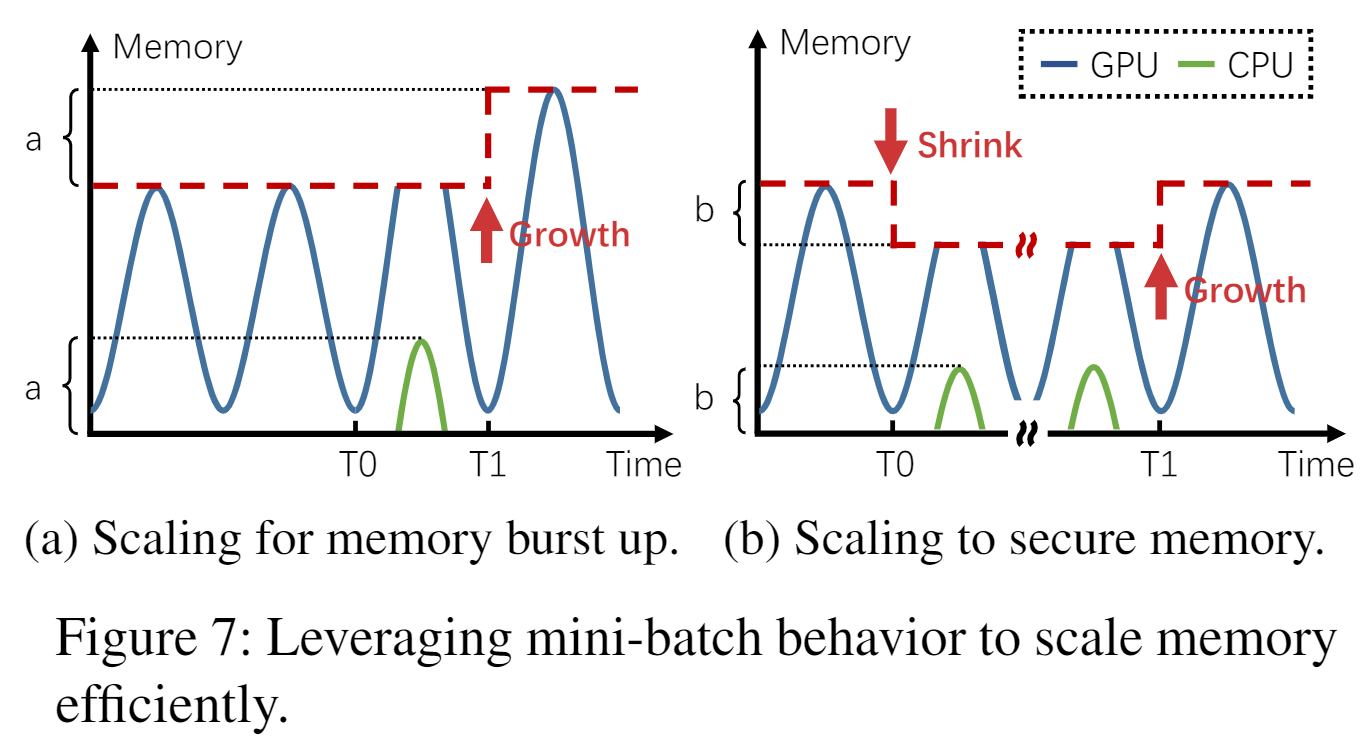

AntMan achieves this by allocating universal memory to DL application tensors, i.e., switching tensors between GPU memory and host DRAM across mini-batches (Fig. 7).

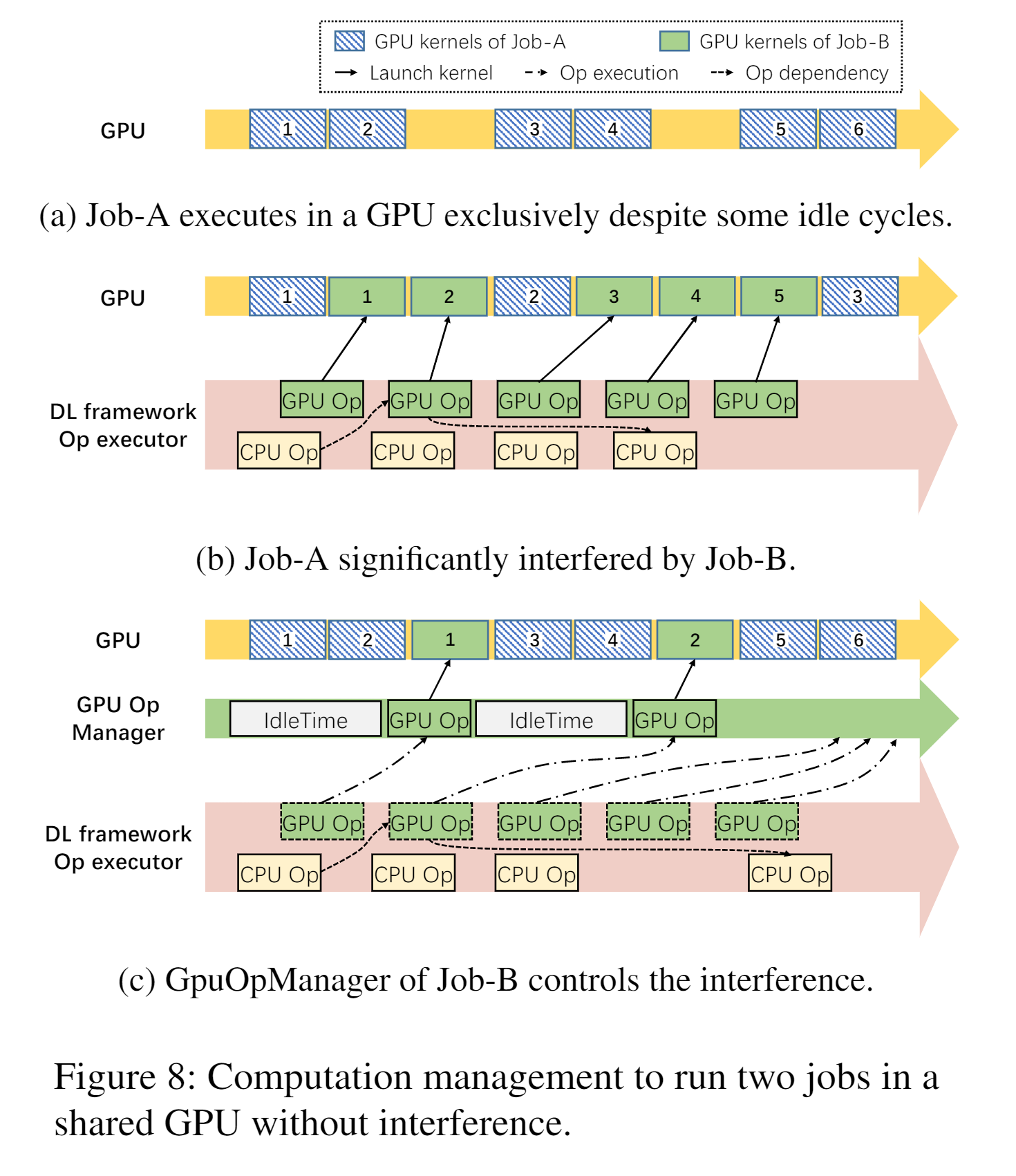
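The memory scaling behaviour described above can be illustrated with a small bookkeeping sketch. It is not AntMan's allocator: `ScalableAllocator`, its fields, and the first-fit migration order are hypothetical, and it tracks only tensor sizes rather than real GPU memory or the framework's cache. It shows the idea that tensors fall back to host memory when the GPU upper limit is reached and return to the GPU when the limit is raised.

```python
from dataclasses import dataclass, field


@dataclass
class ScalableAllocator:
    """Hypothetical bookkeeping model of a GPU memory upper limit."""
    gpu_limit: int                                  # current upper limit, in bytes
    on_gpu: dict = field(default_factory=dict)      # tensor name -> size on GPU
    on_host: dict = field(default_factory=dict)     # tensor name -> size in host DRAM

    def gpu_used(self) -> int:
        return sum(self.on_gpu.values())

    def allocate(self, name: str, size: int) -> str:
        """Place a tensor on the GPU if it fits under the limit, else on the host."""
        if self.gpu_used() + size <= self.gpu_limit:
            self.on_gpu[name] = size
            return "gpu"
        self.on_host[name] = size
        return "host"

    def set_limit(self, new_limit: int) -> None:
        """Apply a new upper limit (assumed to happen between mini-batches)."""
        self.gpu_limit = new_limit
        # Shrinking: move tensors to host memory until usage fits the limit.
        for name in list(self.on_gpu):
            if self.gpu_used() <= self.gpu_limit:
                break
            self.on_host[name] = self.on_gpu.pop(name)
        # Growing: move host tensors back while they fit under the limit.
        for name in list(self.on_host):
            size = self.on_host[name]
            if self.gpu_used() + size <= self.gpu_limit:
                self.on_gpu[name] = self.on_host.pop(name)


alloc = ScalableAllocator(gpu_limit=100)
first = alloc.allocate("a", 60)    # fits on the GPU
second = alloc.allocate("b", 60)   # exceeds the limit, placed in host memory
alloc.set_limit(150)               # limit raised, "b" moves back to the GPU
```

In this model the job keeps running at a reduced limit instead of failing with an out-of-memory error, at the cost of slower access to tensors that live in host memory.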

Computation Management

When multiple DL jobs are launched on the same GPU, the interference is mainly caused by the potential GPU kernel queuing delay and PCIe bus contention.

AntMan introduces GPUOpManager to dispatch operators, with the aim of controlling the interference between jobs (Fig. 8).

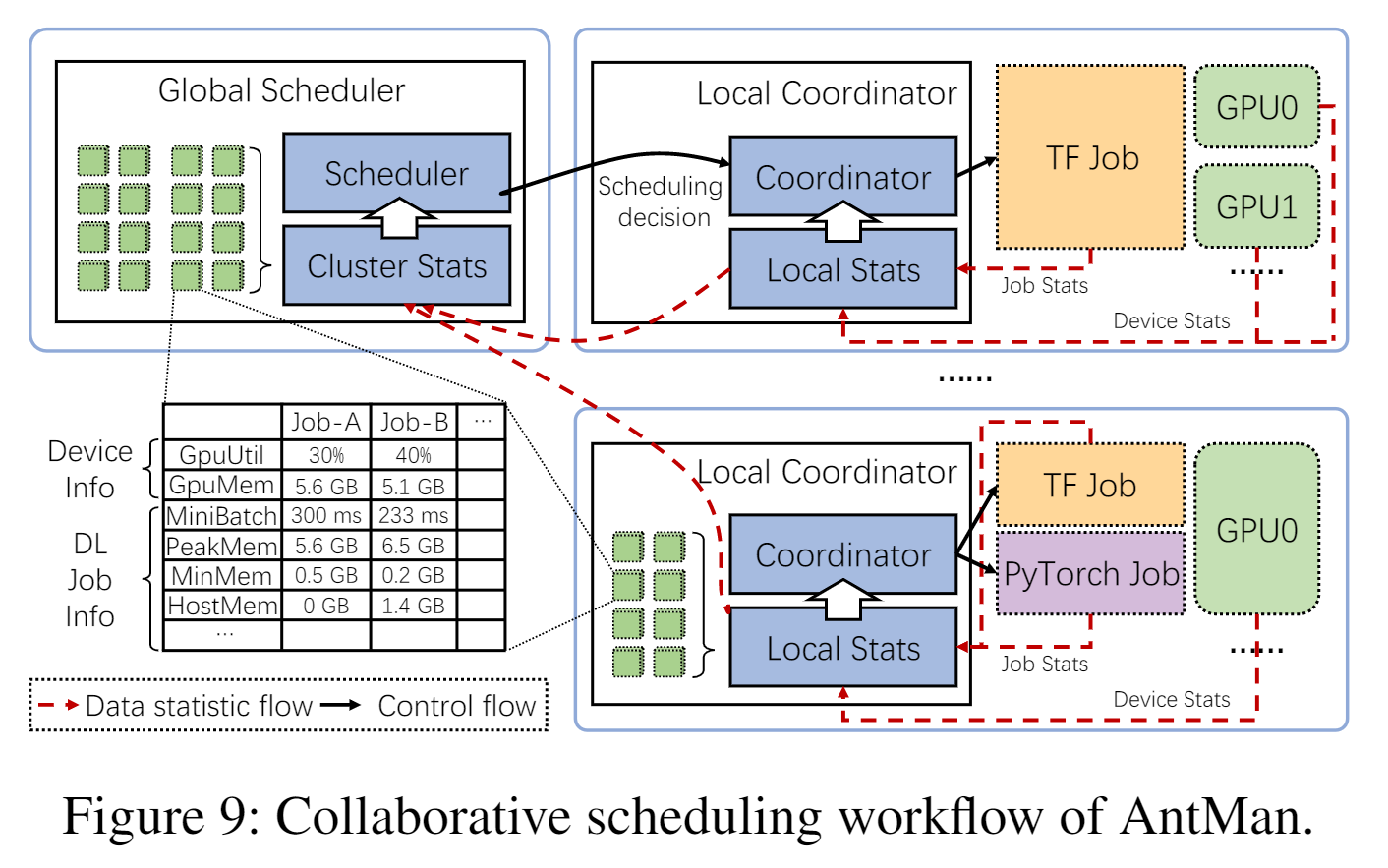
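One way to picture operator dispatch control is a manager that spaces out the operators of an opportunistic job so that it occupies only a fraction of the GPU's time. The sketch below uses simulated time and is an illustration only: `OpManagerSketch`, the `share` parameter, and the idle-gap formula are assumptions, not the interface of AntMan's GPUOpManager.

```python
class OpManagerSketch:
    """Hypothetical dispatcher that inserts idle gaps between operators."""

    def __init__(self, share: float = 1.0):
        if not 0.0 < share <= 1.0:
            raise ValueError("share must be in (0, 1]")
        self.share = share  # fraction of time the job may keep the GPU busy

    def idle_after(self, op_time: float) -> float:
        """Idle time that makes op_time / (op_time + idle) equal to share."""
        return op_time * (1.0 - self.share) / self.share

    def schedule(self, op_times):
        """Return (start, end) per operator and the total elapsed time."""
        now = 0.0
        timeline = []
        for duration in op_times:
            timeline.append((now, now + duration))
            now += duration + self.idle_after(duration)
        return timeline, now


manager = OpManagerSketch(share=0.5)
timeline, total = manager.schedule([1.0, 2.0])
# With a share of 0.5, each operator is followed by an idle gap of equal length.
```

The idle gaps are the time slots left free for the co-located job, which is what reduces kernel queuing delay in this model.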

Collaborative Scheduler

As shown in Figure 9, AntMan adopts a hierarchical architecture in which a global scheduler is responsible for job scheduling. Each worker server runs a local coordinator that manages job execution using the dynamic resource scaling primitives, based on the statistics reported by the DL frameworks.

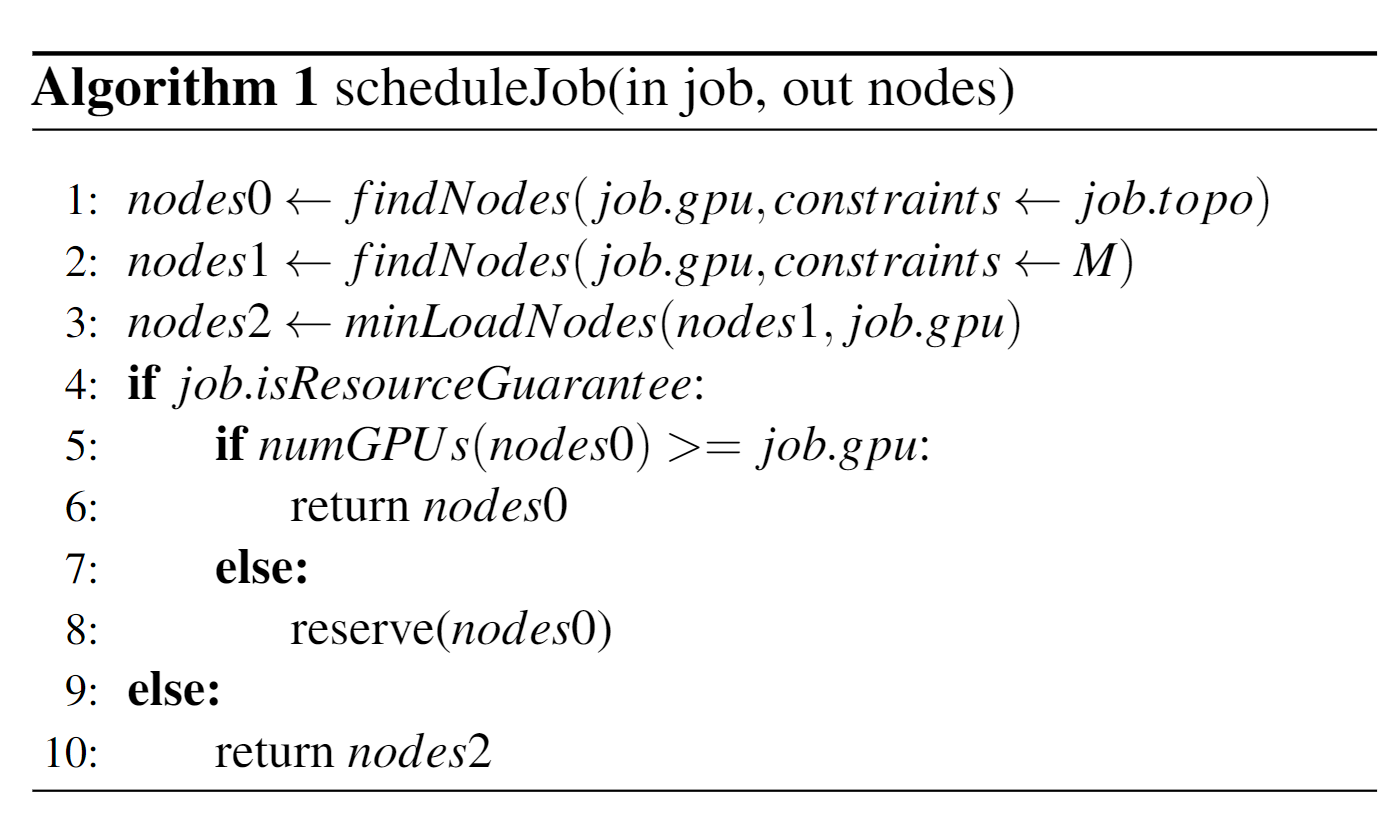

Scheduling Policy

AntMan applies different scheduling policies, as shown in Algorithm 1, which considers the topology (topo), utilization (M), and load of the nodes' GPUs.

Note that AntMan relies on an application-level metric (i.e., mini-batch time) to indicate the performance of resource-guarantee jobs. If it observes unstable performance in a resource-guarantee job, it adopts a pessimistic strategy and limits the GPU resource usage of the co-located opportunistic jobs.
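To make the placement considerations concrete, here is a minimal sketch of how an opportunistic job might be placed using topology and utilization. It does not reproduce Algorithm 1: the `Gpu` record, the `place_opportunistic` function, and the rule "keep the job on one node and pick its least-utilized GPUs" are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    node: str            # server that hosts the GPU (stands in for topology)
    index: int           # GPU index within the node
    utilization: float   # recent utilization in [0, 1]


def place_opportunistic(gpus, num_gpus):
    """Pick num_gpus GPUs on a single node with the lowest total utilization.

    Returns an empty list when no single node has enough GPUs.
    """
    by_node = {}
    for gpu in gpus:
        by_node.setdefault(gpu.node, []).append(gpu)

    best_load, best_choice = None, []
    for node_gpus in by_node.values():
        if len(node_gpus) < num_gpus:
            continue  # this node cannot host the whole job
        chosen = sorted(node_gpus, key=lambda g: g.utilization)[:num_gpus]
        load = sum(g.utilization for g in chosen)
        if best_load is None or load < best_load:
            best_load, best_choice = load, chosen
    return best_choice


cluster = [
    Gpu("n1", 0, 0.9), Gpu("n1", 1, 0.2),
    Gpu("n2", 0, 0.1), Gpu("n2", 1, 0.5),
]
placement = place_opportunistic(cluster, 2)
```

Keeping a job on one node is only one possible reading of the topology constraint. A real scheduler would also need to account for quotas and for resource-guarantee jobs already running on each GPU.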

Evaluation

AntMan is implemented by modifying the memory allocator, executor, and interfaces in the DL framework and using Kubernetes as its scheduler.

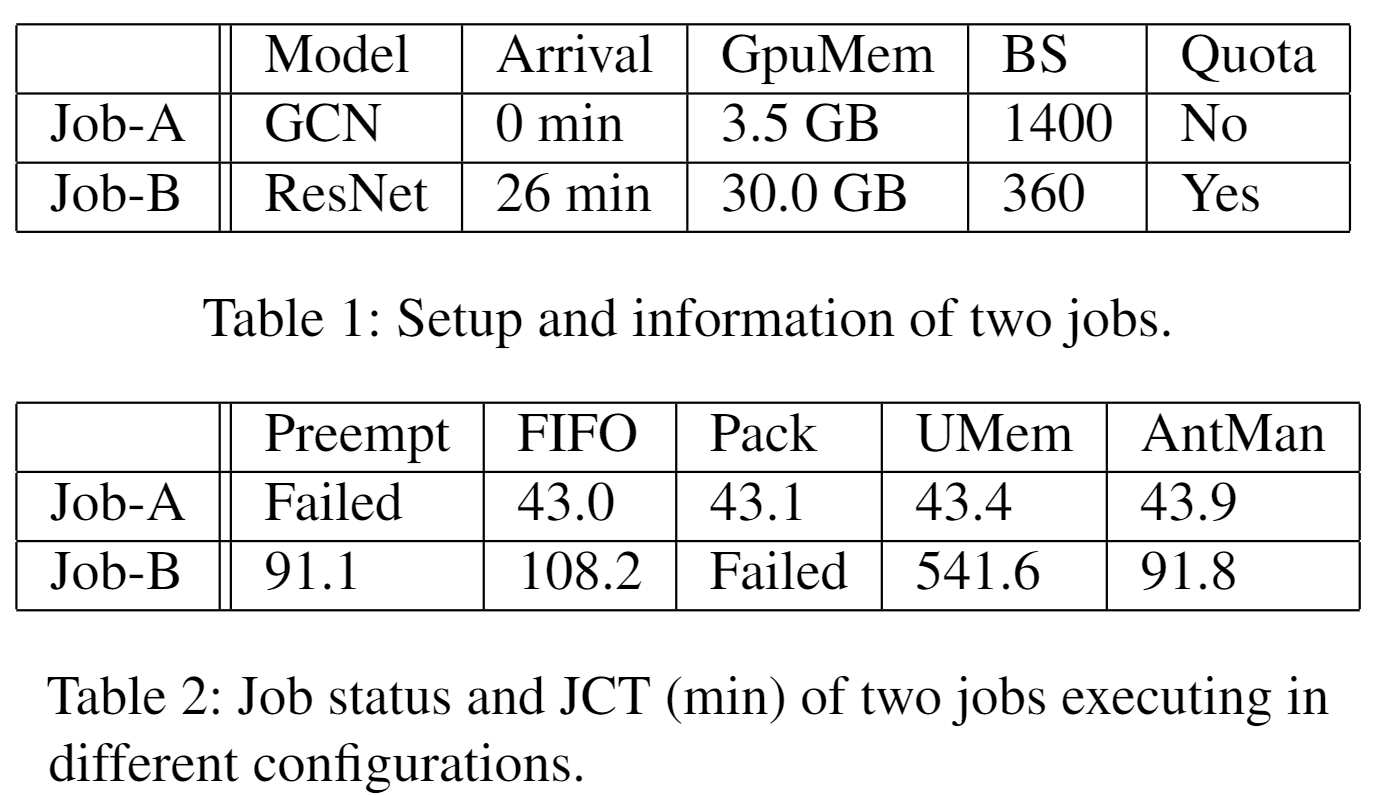

Benchmark

The two jobs are described in Table 1. As shown in Table 2, with AntMan Job-B performs similarly to the Preempt setting, while both Job-A and Job-B are only slightly slower than when executing exclusively.

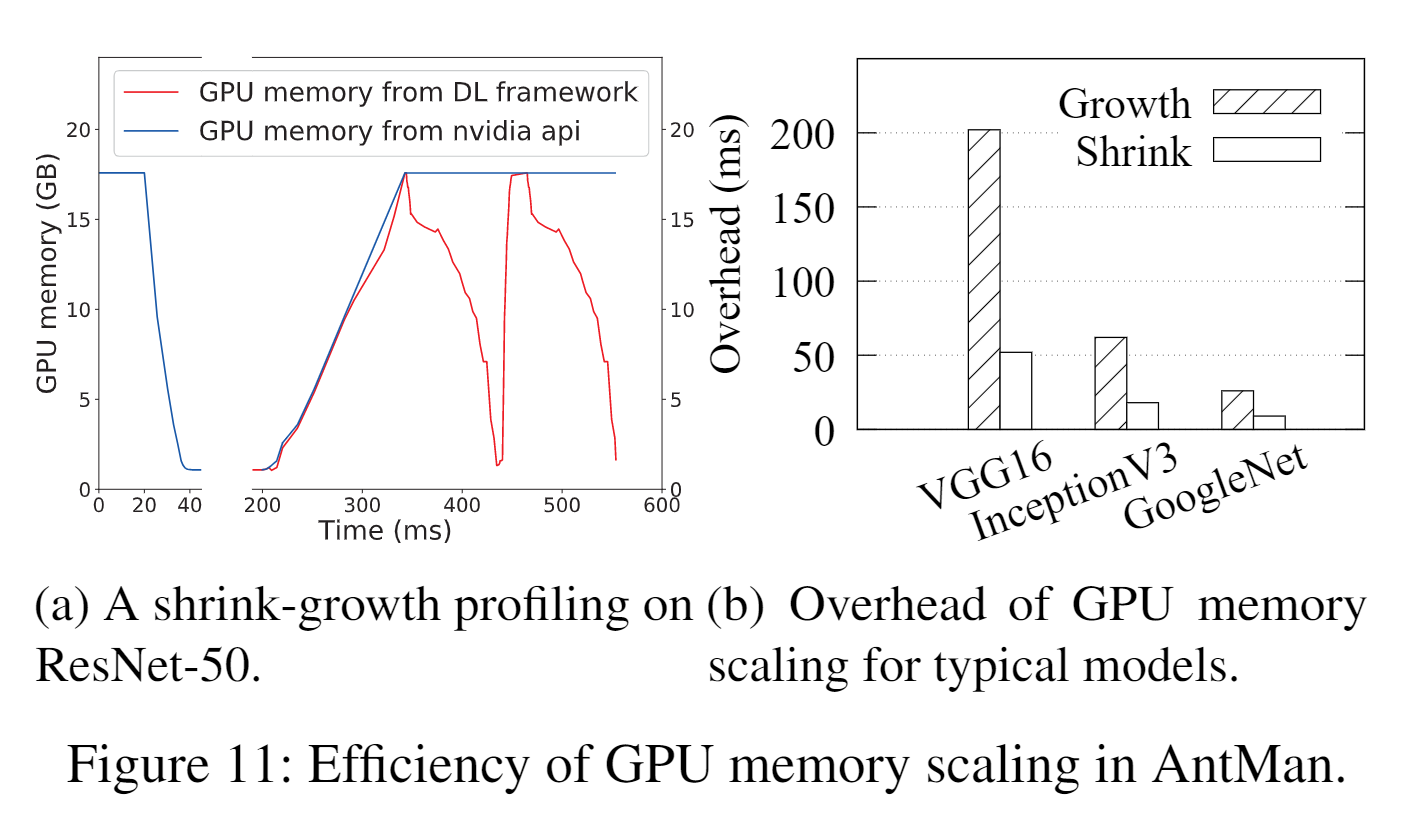

As shown in Figure 11, the overhead of the GPU memory scaling is negligible.

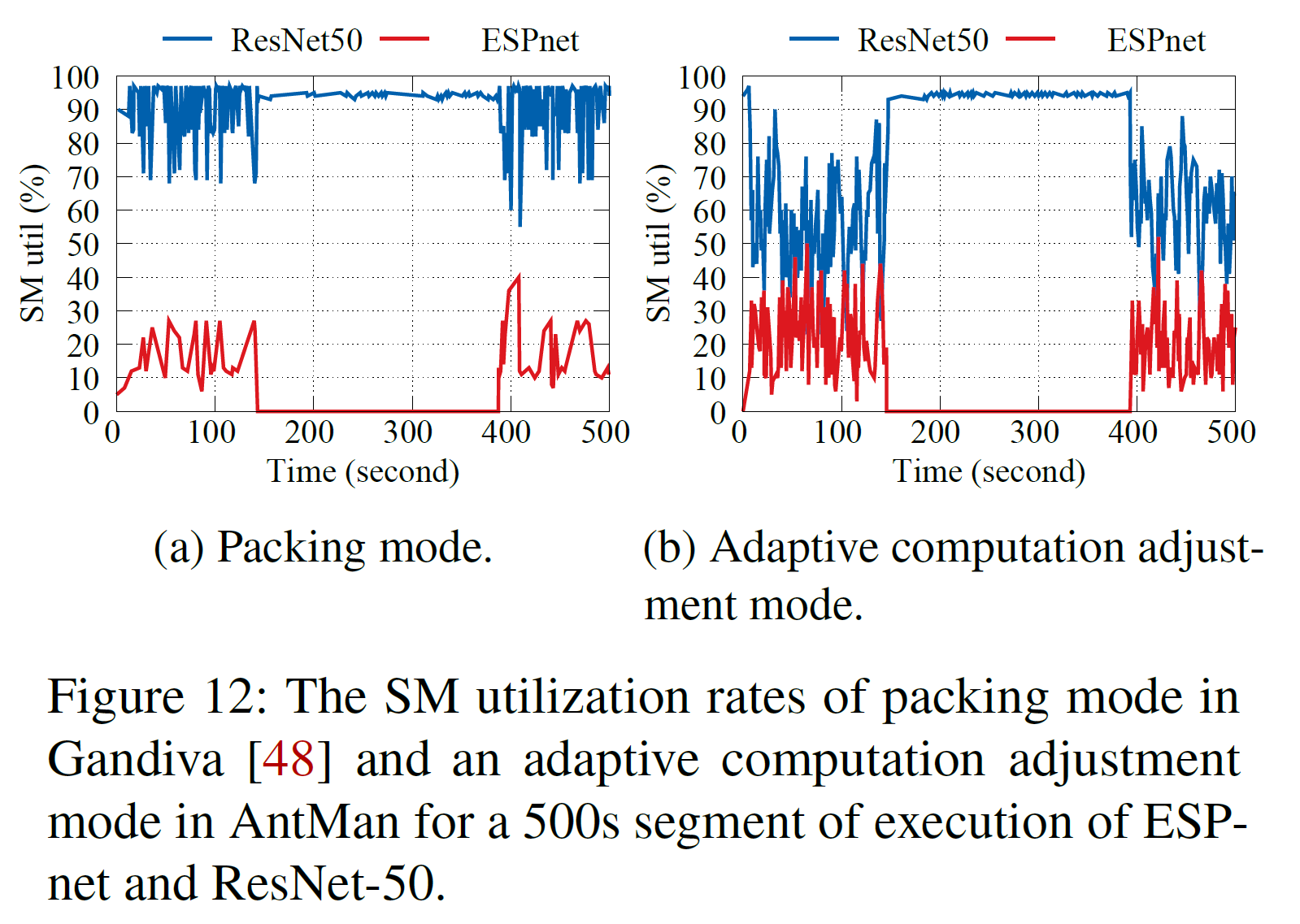

As shown in Figure 12, AntMan can leverage adaptive computation adjustment to use the leftover resources as much as possible while still satisfying the SLA requirements. Specifically, AntMan introduces a feedback-based adjustment approach that continuously monitors the performance of resource-guarantee jobs and uses this performance feedback to adjust the GPU kernel launching frequency of opportunistic jobs.
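A feedback loop of this kind can be sketched as follows. The additive-increase, multiplicative-decrease rule, the function name `adjust_share`, and the parameters `tolerance` and `step` are assumptions chosen for illustration; the summary above does not specify the actual control rule.

```python
def adjust_share(share, observed_ms, baseline_ms, tolerance=0.05, step=0.1):
    """Return the next compute share for an opportunistic job.

    share        current fraction of GPU time given to the opportunistic job
    observed_ms  latest mini-batch time of the resource-guarantee job
    baseline_ms  its mini-batch time when running without interference
    """
    if observed_ms > baseline_ms * (1.0 + tolerance):
        # The guaranteed job slowed down: back off quickly.
        return share / 2.0
    # The guaranteed job is healthy: reclaim leftover capacity gradually.
    return min(1.0, share + step)


# Example run over a few monitoring intervals (made-up mini-batch times).
share = 0.8
history = []
for observed in [120.0, 101.0, 100.0]:
    share = adjust_share(share, observed, baseline_ms=100.0)
    history.append(share)
```

Backing off faster than ramping up reflects the pessimistic stance toward opportunistic jobs: protecting the resource-guarantee job takes priority over using the last bit of spare capacity.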

Trace Experiment

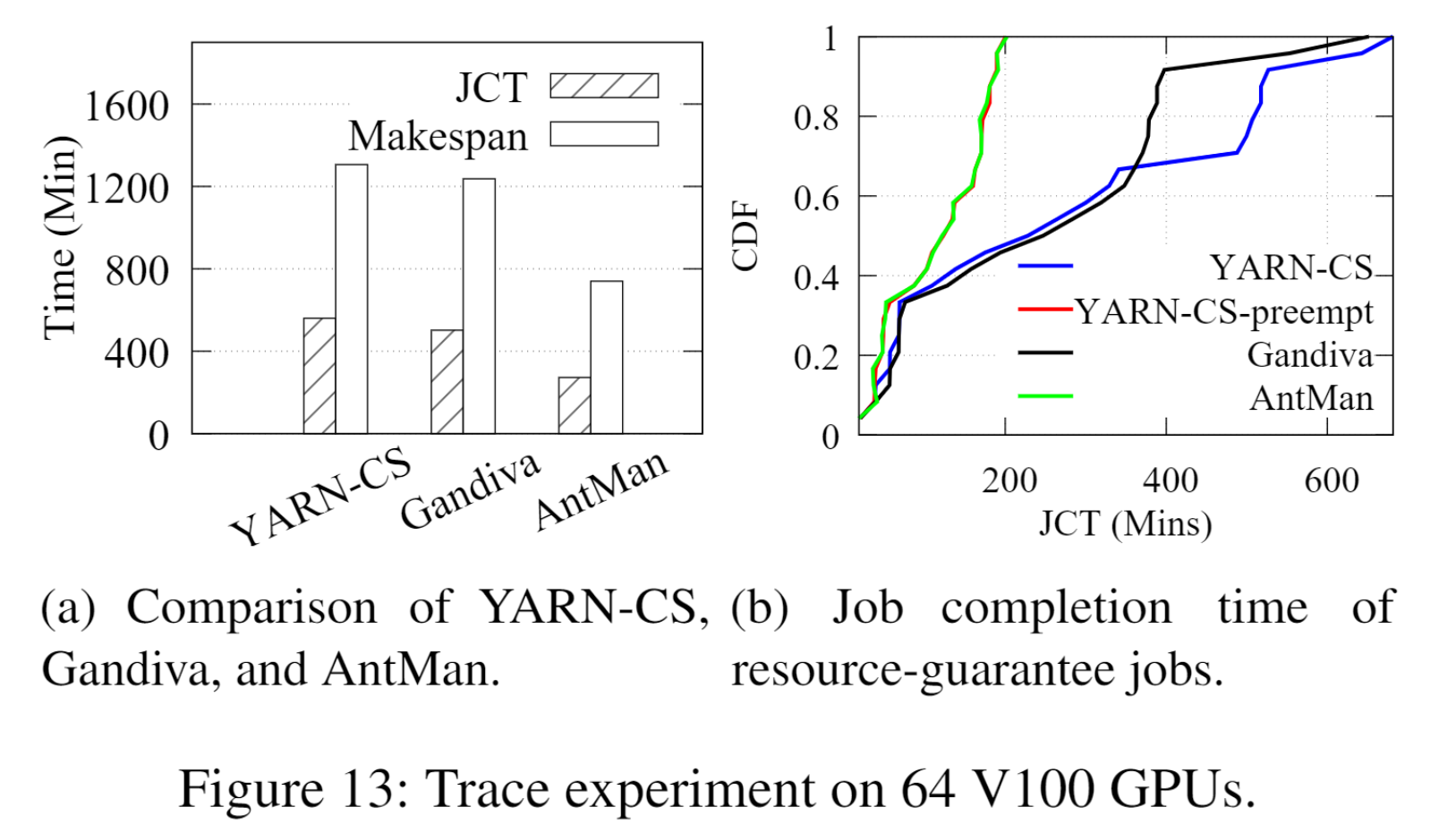

The workloads in this part include CV, NLP, speech, etc. As shown in Figure 13, AntMan achieves lower JCT and makespan than YARN-CS and Gandiva. In addition, the JCT of resource-guarantee jobs under AntMan is almost the same as under YARN-CS-preempt. In other words, AntMan gives resource-guarantee jobs nearly the same performance as preemption would.

Cluster Experiment

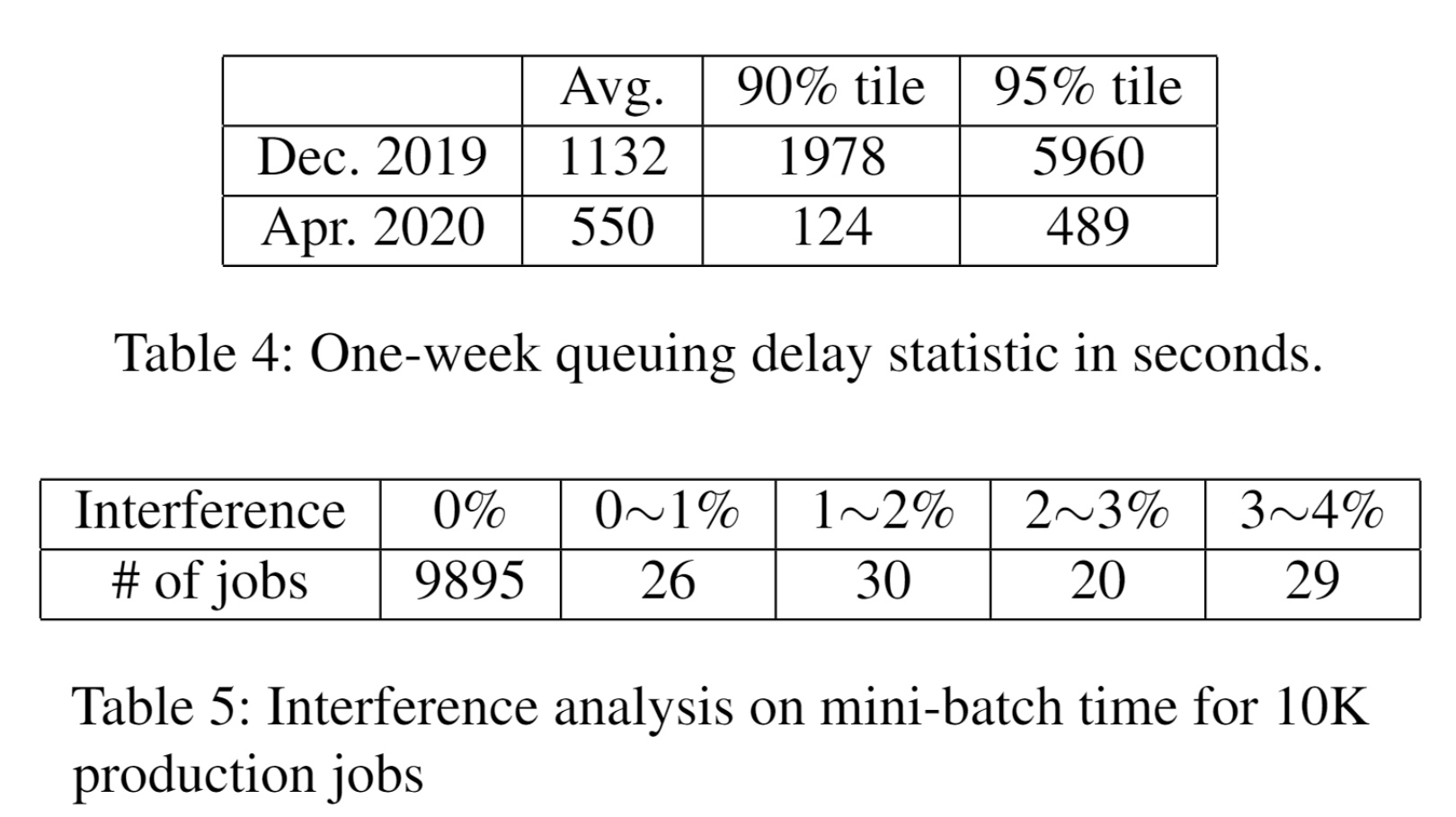

AntMan has been deployed on the production clusters of Alibaba to serve tens of thousands of daily deep learning training jobs. As shown in Table 4 and Table 5, AntMan clearly reduces the queuing delay of jobs and keeps resource-guarantee jobs free from interference.